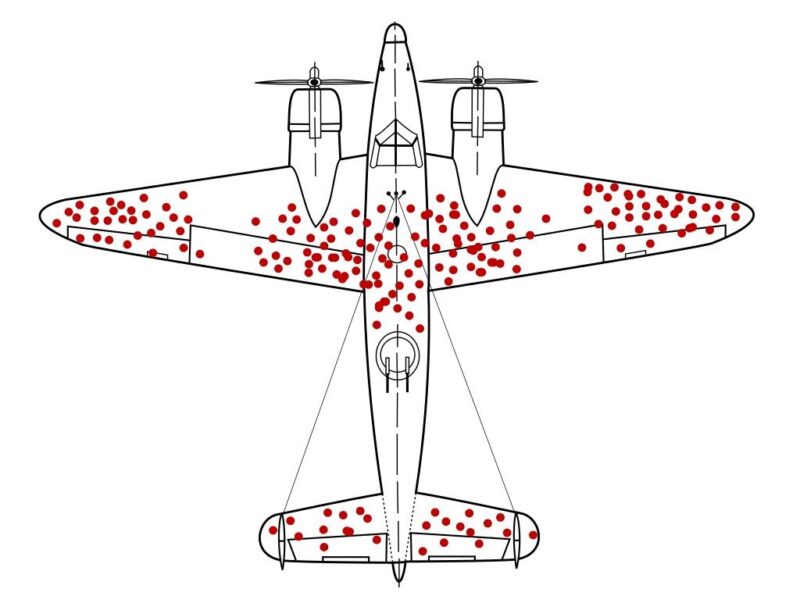

World War II. An important time for making sure your planes don’t get shot down by enemy fighters. Oftentimes, planes would return from battle covered with bullet holes, and the damage wasn’t uniformly distributed across the aircraft. The data gave insight into where armour plating should be added. Adding armour, of course, comes with a significant trade-off: more armour makes the planes less vulnerable to bullet damage, but it also makes them heavier, and heavier planes are less manoeuvrable and use more fuel. Armouring the planes too much is a problem; armouring them too little is a problem. So the traditional response was to find an optimum middle ground. And the bullet-holed aircraft data perhaps presented a way to manage the contradiction.

Enter mathematician Abraham Wald. He was asked to look at the data. The Air Force was expecting to receive recommendations to strengthen the most commonly damaged parts of the planes and so reduce the number being shot down. Counter-intuitively, Wald had some rather different – contradiction-solving – advice: perhaps, he speculated, the reason certain areas of the planes weren’t covered in bullet holes was that planes shot in those areas did not return. This insight led to the armour being reinforced on the parts of the plane where there were no bullet holes. A classic illustration of Inventive Principle 13, The Other Way Around.

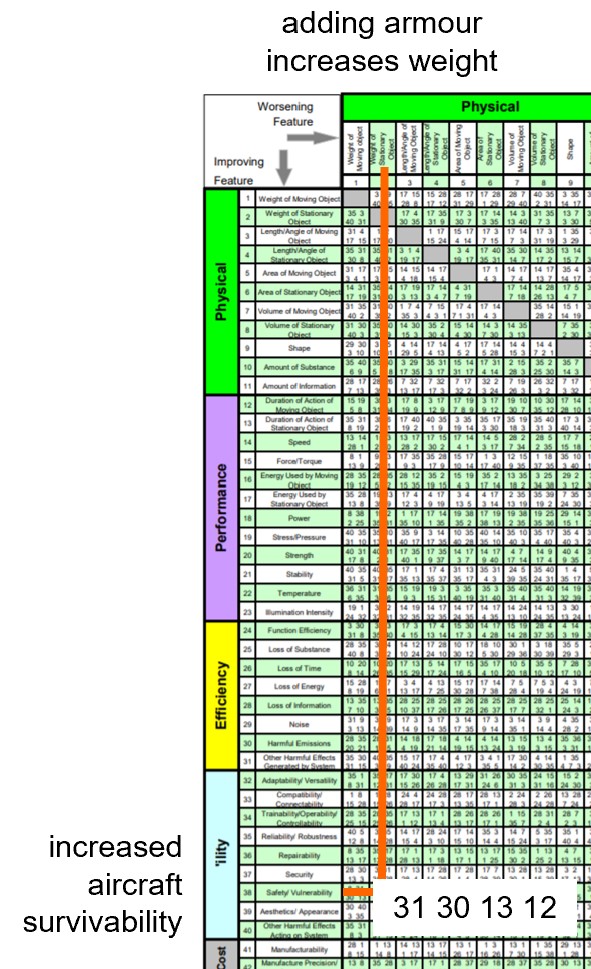

Wald didn’t have access to the Contradiction Matrix back in 1943, when his insight came to light. If he had, it would have told him something like this:

At first sight, Principle 13 is a decidedly strange-looking answer to a Safety-versus-Weight problem. And making best use of the strategy does indeed demand a high degree of lateral thinking. What are we supposed to do the opposite of? How can we sensibly do the opposite of what the data is apparently telling us? Or, how might the missing pieces of data turn out to be more meaningful than the data we have?